“What’s the best evaluation cadence for analytics-based QA teams?”


Hello Insight

- 10 min read

In an analytics-driven team, the evaluation cadence (how often QA work is reviewed, scored, and discussed) is the framework that paces every other quality assurance (QA) activity. A well-defined cadence keeps the team aligned on its standards and gives it a regular point at which to improve its processes. Choosing the right cadence therefore directly affects how efficiently the team works and how consistently it delivers high-quality output.

Setting that cadence means weighing several factors, chiefly team dynamics and project complexity. Regular evaluations, along with adjustments to the evaluation schedule itself, show where the process can improve and build a habit of continuous improvement. Finding the right cadence is therefore not just a matter of picking dates on a calendar. It lays the foundation on which an analytics-based QA team operates.


Why Setting an Optimal QA Evaluation Cadence is Crucial

An appropriate evaluation cadence keeps quality and efficiency in balance for analytics-based QA teams. When evaluations happen at regular, predictable intervals, the team can catch issues before they escalate, and each review cycle can improve on the last. A steady rhythm also creates accountability. Team members know when their work will be reviewed and against which standards, so recurring patterns and improvement areas are easier to spot.

Project complexity and team dynamics have the strongest influence on that rhythm. Intricate projects usually need more frequent evaluations to protect quality. Small teams, by contrast, may do better with less frequent formal reviews, so that evaluation overhead does not crowd out delivery work. A cadence designed around these factors gives QA teams a better chance of improving software quality and overall performance.

Factors Influencing Evaluation Cadence

Several factors shape the right evaluation cadence for an analytics-based QA team. Project complexity is central. Intricate projects generate issues faster and usually need more frequent evaluations to keep quality metrics on target. Simpler projects can run on a lighter schedule, which lets the team spend its time on value-adding work instead of reviews that rarely find anything.

Team size and dynamics matter just as much. Larger teams typically need regular, structured assessments to track performance and keep everyone aligned with shared goals. Smaller, highly collaborative teams often do well with a more flexible schedule that favors continuous feedback over formal evaluations. The aim is a balance that protects both productivity and quality and can shift as project demands change.
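To make the trade-off concrete, here is a minimal Python sketch that turns project complexity and team size into a suggested number of days between evaluations. The complexity scale, the baseline intervals, and the team-size cut-offs are all hypothetical placeholders rather than recommended values. A team would need to calibrate them against its own history.

```python
from dataclasses import dataclass

# Hypothetical baseline intervals (days between evaluations) per complexity level.
BASE_INTERVAL_DAYS = {"low": 14, "medium": 7, "high": 3}


@dataclass
class TeamContext:
    complexity: str  # "low", "medium" or "high" (illustrative scale)
    team_size: int   # number of people whose work is evaluated


def evaluation_interval_days(ctx: TeamContext) -> int:
    """Suggest the number of days between evaluations (illustrative heuristic)."""
    if ctx.complexity not in BASE_INTERVAL_DAYS:
        raise ValueError(f"unknown complexity level: {ctx.complexity!r}")
    interval = BASE_INTERVAL_DAYS[ctx.complexity]
    if ctx.team_size >= 10:
        # Larger teams: tighten the fixed rhythm to keep everyone aligned.
        interval = max(1, interval - 2)
    elif ctx.team_size <= 4:
        # Small, collaborative teams: rely more on continuous informal feedback.
        interval += 7
    return interval


print(evaluation_interval_days(TeamContext("high", 12)))   # 1
print(evaluation_interval_days(TeamContext("medium", 6)))  # 7
print(evaluation_interval_days(TeamContext("low", 3)))     # 21
```

The heuristic only encodes the direction of the effects described above: more complexity or a larger team shortens the interval, and a small team lengthens it.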

- Project Complexity

Complex projects involve many components, dependencies, and iterations, and each one is a place where defects can hide. The evaluation cadence for such projects has to flex accordingly, for example by adding reviews around integration points or major iterations, so that deliverable quality holds up across every layer of requirements.

Complexity also interacts with the makeup of the team. A well-coordinated team can handle a complex project through rapid iteration and timely evaluations. A team that struggles with communication and alignment can be overwhelmed by the same complexity and miss chances to catch quality problems. The nature of the project and the team's dynamics should therefore both shape the cadence.

- Team Size and Dynamics

Team size and composition strongly affect which cadence works. A team with a balanced mix of experience levels adapts more easily to continuous feedback and iterative improvement. Larger teams usually need a more structured cadence so that evaluations stay manageable and no single reviewer is overloaded. Smaller teams can take a more flexible approach and adjust quickly to immediate project needs.

Team dynamics also shape what evaluations achieve. Teams with open communication tend to get more out of each review because members share insights and lessons learned, and a range of perspectives produces more thorough assessments. Tailoring the cadence to the team's specific characteristics supports both productivity and continuous improvement in QA practice.

Consequences of Poor Evaluation Cadence

A poorly chosen evaluation cadence can undermine a team's effectiveness and the success of the project. When evaluations are infrequent or inconsistent, productivity drops. Without regular feedback, team members lose direction and project timelines slip. Over time this breeds frustration, lowers morale, and erodes motivation and commitment.

A poor cadence also hurts software quality directly. When evaluations are sparse or sporadic, critical bugs can go unnoticed through much of the development lifecycle. The later they are found, the more they cost to fix and the more they damage user satisfaction and trust. A consistent cadence provides regular feedback, keeps quality standards in view for the whole project, and lets the team respond quickly when something goes wrong.

- Decreased Productivity

Lower productivity directly limits an analytics-based QA team's ability to deliver quality results. When the cadence is off, work that one review should have caught piles up for rework, and the rework forces frequent context switching. That disrupts workflow and focus, which slows output and compromises quality. A balanced cadence lets team members manage their time and workload without getting bogged down, and that in turn supports better collaboration.

The effects extend beyond individual productivity. As backlogs grow and communication breaks down, the chance of errors in the testing process rises, deadlines slip, and critical issues go unnoticed, which causes further delays. A well-chosen cadence counters this by letting the team work more efficiently, and that improves both morale and software quality.

- Reduced Software Quality

Inadequate evaluation also lowers software quality. When evaluations are infrequent or poorly structured, critical issues go unnoticed. They surface later as bugs and performance problems that degrade the user experience and can damage user trust and satisfaction.

To protect quality, analytics-based QA teams should evaluate on a consistent schedule. A well-defined cadence combines regular check-ins, analysis of quality and performance metrics, and ongoing adjustment of the process. These practices help teams catch defects early, confirm compliance with quality standards, and keep the software reliable. Getting both the frequency and the structure of evaluations right is essential to sustained software quality.

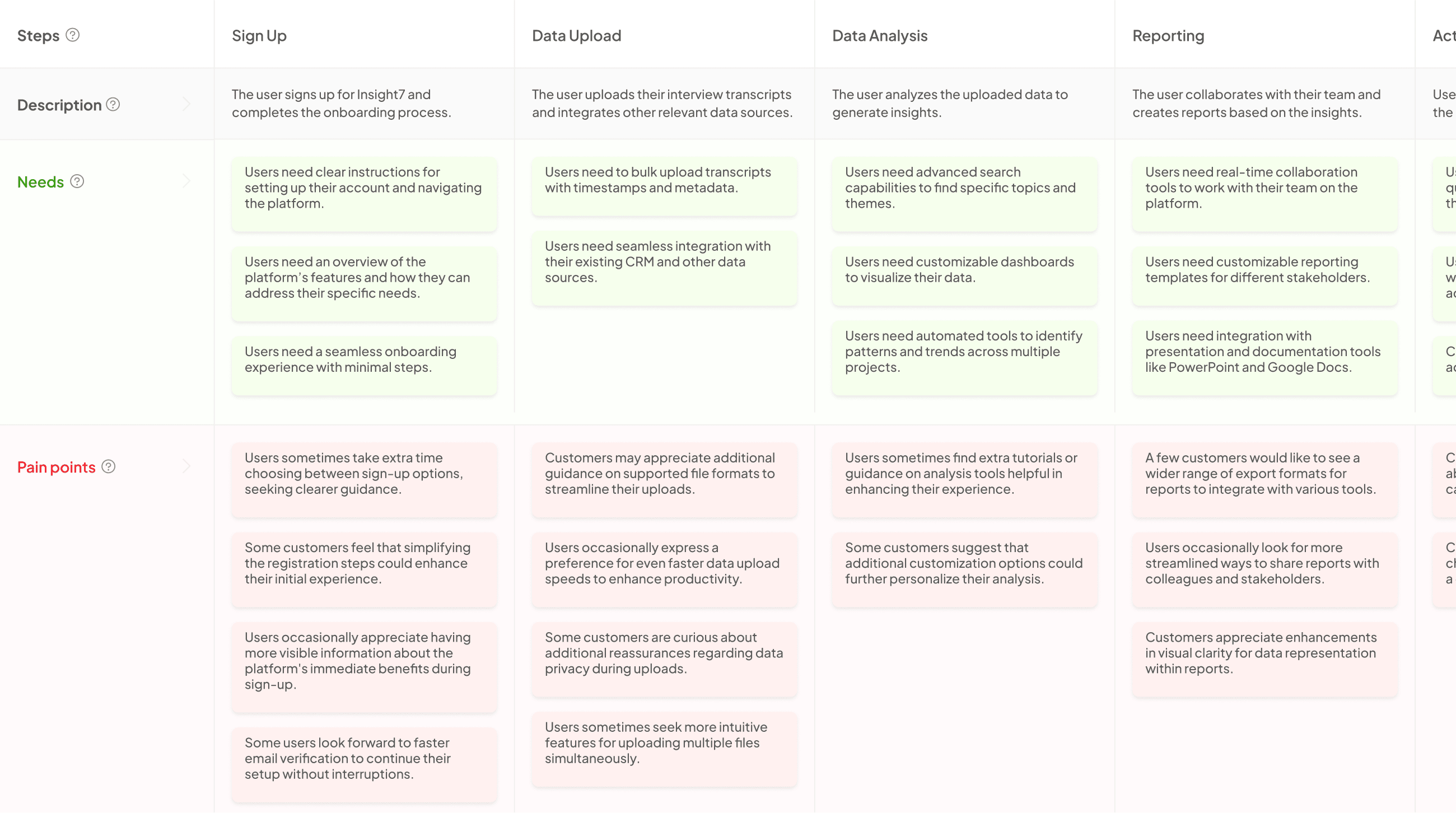


Designing an Optimal QA Evaluation Cadence

Designing an effective evaluation cadence starts with assessing the current workflow. Identify the existing processes and the bottlenecks that slow them down, so that the evaluation approach fits how the team actually works.

Next, define clear objectives and key performance indicators (KPIs) that support broader business goals. Measurable targets focus the team on the areas that drive improvement. Finally, put the cadence into practice and iterate on it based on early results, using a continuous feedback loop to refine the process over time. A flexible cadence lets the team adapt as conditions change, so evaluations keep improving quality and productivity and the insights they produce stay actionable.

Step 1: Assess the Current Workflow

The first step is to assess the current workflow. Analyze the existing QA processes to pinpoint inefficiencies and bottlenecks, and ask team members about the challenges they face, since they often know exactly where the process breaks down.

Map the current process from project inception to final testing. Identify each stage and highlight any delays or redundancies. This shows how each stage contributes to productivity and software quality, and it lays the groundwork for the steps that follow. Repeat the assessment periodically so the workflow stays responsive to changing needs.

- Evaluate existing processes and identify bottlenecks.

Start with an audit of the current evaluation workflow. Map each step of the process to spot inefficiencies that slow performance or affect quality. Look in particular for the stages that cause delays, such as lengthy review periods or miscommunication between team members.

Then define metrics to monitor those bottlenecks, such as evaluation turnaround time and adherence to established guidelines. Aligning the cadence with these metrics streamlines the process and improves both efficiency and service quality. Revisiting and refining the process regularly keeps resources well used and client satisfaction high.
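As an illustration of the turnaround metric, the sketch below uses a simple event log to compute the average time spent in each stage and the end-to-end turnaround of each evaluation. It then reports the slowest stage as the likely bottleneck. The log format, stage names, and timestamps are invented for the example. A real team would export equivalent data from whatever tool records its evaluations.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical log: (evaluation id, stage, started, finished).
EVENTS = [
    ("E1", "sampling", "2024-05-01 09:00", "2024-05-01 10:00"),
    ("E1", "review",   "2024-05-01 10:00", "2024-05-02 16:00"),
    ("E1", "feedback", "2024-05-02 16:00", "2024-05-02 18:00"),
    ("E2", "sampling", "2024-05-03 09:00", "2024-05-03 09:30"),
    ("E2", "review",   "2024-05-03 09:30", "2024-05-04 11:30"),
    ("E2", "feedback", "2024-05-04 11:30", "2024-05-04 12:30"),
]
FMT = "%Y-%m-%d %H:%M"


def stage_hours(events):
    """Average hours spent in each stage across all evaluations."""
    durations = defaultdict(list)
    for _eval_id, stage, start, end in events:
        delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
        durations[stage].append(delta.total_seconds() / 3600)
    return {stage: mean(hours) for stage, hours in durations.items()}


def turnaround_hours(events):
    """Hours from the first stage start to the last stage finish, per evaluation."""
    spans = {}
    for eval_id, _stage, start, end in events:
        s, e = datetime.strptime(start, FMT), datetime.strptime(end, FMT)
        lo, hi = spans.get(eval_id, (s, e))
        spans[eval_id] = (min(lo, s), max(hi, e))
    return {k: (hi - lo).total_seconds() / 3600 for k, (lo, hi) in spans.items()}


def bottleneck(events):
    """Stage with the highest average duration."""
    averages = stage_hours(events)
    return max(averages, key=averages.get)


print(turnaround_hours(EVENTS))  # {'E1': 33.0, 'E2': 27.5}
print(bottleneck(EVENTS))        # review
```

Averaging per stage rather than per evaluation is deliberate. It points to *where* time goes, which is what the bottleneck analysis needs.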

Step 2: Define Clear Objectives and KPIs

Clear objectives and KPIs give the cadence a purpose. Start by identifying specific goals that support your overall business outcomes, including the quality standards you want to reach and the timelines you need to meet. These objectives act as a roadmap that keeps the team focused on the results that matter.

KPIs then make progress measurable and show whether the strategies you have put in place are working. Common KPIs for analytics-based QA teams include defect density and test coverage. Teams that work in sprints may also track delivery measures such as sprint velocity. Reviewing these indicators regularly keeps the evaluation cadence relevant and lets it adapt to changing project demands.
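Defect density and test coverage are simple ratios. The sketch below assumes two common conventions: defects per thousand lines of code (KLOC), and requirements coverage, meaning the share of requirements with at least one linked test. Other definitions, such as code coverage, are equally valid. The release figures are made up.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    defects_found: int
    lines_of_code: int
    requirements_total: int
    requirements_tested: int  # requirements with at least one linked test


def defect_density(stats: ReleaseStats) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if stats.lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return stats.defects_found / (stats.lines_of_code / 1000)


def requirement_coverage_pct(stats: ReleaseStats) -> float:
    """Percentage of requirements covered by at least one test."""
    if stats.requirements_total <= 0:
        raise ValueError("requirements_total must be positive")
    return 100 * stats.requirements_tested / stats.requirements_total


release = ReleaseStats(defects_found=18, lines_of_code=45_000,
                       requirements_total=120, requirements_tested=102)
print(defect_density(release))            # 0.4
print(requirement_coverage_pct(release))  # 85.0
```

Comparing these numbers from one evaluation cycle to the next matters more than any single value, because the trend is what tells a team whether its cadence is working.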

- Set measurable goals that align with business outcomes.

Goals tied to business outcomes give the team's performance measurements a purpose, because every metric then connects to something the business cares about. Goals that are clear, specific, and time-bound keep the team focused and make it easier to deliver results with a visible impact.


When linking goals to outcomes, choose KPIs that capture both quality and efficiency. Tracking error rates or customer satisfaction, for instance, highlights where improvement is needed. Reviewing these metrics on a regular schedule lets the team adjust its strategy based on current feedback and reinforces the role of quality in the organization's broader success.
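A goal becomes measurable once it has a KPI, a target, a direction, and a due date. The sketch below checks a reading against such a goal. The KPI names, targets, and dates are hypothetical, and the three status labels are just one possible convention.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Goal:
    kpi: str                # hypothetical KPI name, e.g. "error_rate_pct"
    target: float
    higher_is_better: bool  # False for metrics like error rate
    due: date


def goal_status(goal: Goal, actual: float, today: date) -> str:
    """Return "met", "open" (not met, still before the due date) or "missed"."""
    if goal.higher_is_better:
        met = actual >= goal.target
    else:
        met = actual <= goal.target
    if met:
        return "met"
    return "missed" if today > goal.due else "open"


error_goal = Goal("error_rate_pct", target=2.0, higher_is_better=False, due=date(2024, 6, 30))
csat_goal = Goal("csat_score", target=4.5, higher_is_better=True, due=date(2024, 6, 30))

print(goal_status(error_goal, actual=1.6, today=date(2024, 6, 15)))  # met
print(goal_status(csat_goal, actual=4.2, today=date(2024, 6, 15)))   # open
print(goal_status(csat_goal, actual=4.2, today=date(2024, 7, 1)))    # missed
```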

Step 3: Implement and Iterate

Implementation works best with a structured approach. Build feedback mechanisms into the initial rollout so you learn early how well the new process works and where it needs improvement. Involve the whole team in refining the workflow, and address pain points quickly so the new cadence takes hold.

Once the initial process is in place, set up a continuous feedback loop. Regularly assess the impact of each change and adjust where needed. Encourage team members to share their experiences, since their observations often point to better solutions. Iterating this way lets the team adapt to new challenges and keeps the cadence aligned with both team goals and project demands.
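One way to make the loop concrete is to let each cycle's results adjust the interval to the next evaluation. The rule below halves the interval when many issues turn up, doubles it when none do, and clamps it to a fixed range. It is a hypothetical heuristic chosen for illustration, not an established standard, and the thresholds are placeholders.

```python
def next_interval(current_days: int, issues_found: int,
                  tighten_above: int = 5, relax_below: int = 1,
                  min_days: int = 1, max_days: int = 28) -> int:
    """Adjust the days until the next evaluation (hypothetical rule)."""
    if issues_found > tighten_above:
        proposed = current_days // 2   # many issues: evaluate sooner
    elif issues_found < relax_below:
        proposed = current_days * 2    # nothing found: space reviews out
    else:
        proposed = current_days        # within the band: keep the rhythm
    return max(min_days, min(max_days, proposed))


interval = 7
for issues in [8, 3, 0, 0, 0]:
    interval = next_interval(interval, issues)
    print(issues, "->", interval)
# 8 -> 3, 3 -> 3, 0 -> 6, 0 -> 12, 0 -> 24
```

The clamp keeps the rule from drifting to extremes. Without it, a run of quiet cycles would push evaluations out indefinitely.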

- Initial Implementation Phase

The initial implementation phase lays the foundation for the evaluation cadence. Gather the relevant information and configure your evaluation tooling to match the project's requirements. Discussing compliance requirements and evaluation criteria with the team at this stage makes later evaluations smoother, and identifying specific needs early makes the cadence more effective.

This phase is also where objectives and KPIs are set. These metrics let the team assess its progress and connect its work to business goals. As team members get used to the new tools and processes, a feedback loop forms, and the adjustments it drives keep the cadence responsive to changing project demands.

- Continuous Feedback Loop

A continuous feedback loop keeps the evaluation cadence effective. It draws insights from ongoing work as well as from completed evaluations. By engaging regularly with feedback from team members and project stakeholders, the team can adjust its strategy as the project unfolds.

To build an effective continuous feedback loop, consider the following key components:

- Regular Check-Ins: Schedule frequent meetings to discuss feedback and insights from recent evaluations.

- Real-Time Data Analysis: Utilize analytics tools to track performance and detect issues as they arise rather than waiting for the next evaluation cycle.

- Iterative Testing: Implement testing phases that allow for quick adjustments based on feedback, ensuring that quality improvements are continual and not sporadic.
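Real-time data analysis can be as simple as a rolling window over recent check results. The window raises a flag when the failure rate crosses a threshold, so the team does not have to wait for the next scheduled review. This Python sketch assumes each check reports pass or fail. The window size and threshold are illustrative defaults.

```python
from collections import deque


class RollingErrorMonitor:
    """Flag when the failure rate over the last `window` checks exceeds a threshold."""

    def __init__(self, window: int = 20, threshold: float = 0.10):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.threshold = threshold

    def record(self, passed: bool) -> bool:
        """Record one check result; return True if an alert should fire."""
        self.results.append(passed)
        if len(self.results) < self.results.maxlen:
            return False  # not enough data yet to judge the rate
        failures = self.results.count(False)
        return failures / len(self.results) > self.threshold


monitor = RollingErrorMonitor(window=10, threshold=0.2)
alerts = [monitor.record(True) for _ in range(10)]
alerts += [monitor.record(False) for _ in range(3)]
print(alerts[-3:])  # [False, False, True]
```

Waiting until the window is full avoids false alarms from the first few results, where a single failure would otherwise look like a 100% failure rate.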

These practices help maintain software standards and team efficiency and keep everyone aware of project changes. Continuous feedback supports better decisions and builds a culture of excellence within the QA team.

Leveraging Tools for Optimal QA Evaluation Cadence

The right tools make a consistent evaluation cadence much easier to sustain. Software that can record, transcribe, and analyze data, such as Insight7, streamlines evaluations with customizable templates for different types of QA assessment. These templates let teams check transcripts systematically for compliance against predefined criteria.
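To show what checking transcripts against predefined criteria can look like, here is a deliberately simple keyword-matching sketch. It is not Insight7's method or API. The criteria names and phrases are hypothetical, and production tools generally use far more robust matching than exact substrings.

```python
import re

# Hypothetical criteria: each maps to phrases that count as evidence it was met.
CRITERIA = {
    "greeting": ["thank you for calling", "how can i help"],
    "identity_check": ["verify your", "date of birth"],
    "closing": ["anything else", "have a great day"],
}


def score_transcript(text: str, criteria=CRITERIA) -> dict:
    """Mark each criterion as met if any of its phrases appears in the transcript."""
    normalized = re.sub(r"\s+", " ", text.lower())  # case- and whitespace-insensitive
    return {name: any(phrase in normalized for phrase in phrases)
            for name, phrases in criteria.items()}


def compliance_rate(results: dict) -> float:
    """Fraction of criteria met."""
    if not results:
        raise ValueError("no criteria to score")
    return sum(results.values()) / len(results)


transcript = """Agent: Thanks for calling, how can I help?
Customer: I'd like to update my address.
Agent: Done. Is there anything else?"""
results = score_transcript(transcript)
print(results)  # {'greeting': True, 'identity_check': False, 'closing': True}
print(round(compliance_rate(results), 2))  # 0.67
```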

Issue-tracking and test-management tools such as Jira and TestRail improve collaboration and communication within QA teams. They track issues and progress, which keeps the team aligned with project goals. Analytics tools, in turn, extract insights from evaluation data that can inform ongoing refinements to the cadence. Used together, these tools improve operational efficiency and the quality of the software under test.

Recommended Tools for Streamlining Evaluation Processes

Several tools can support the evaluation process in analytics-based QA teams. Insight7 and Jira help track and manage QA workflows. Insight7 in particular can record, transcribe, and analyze data at scale, which makes it easier to extract actionable insights from evaluations.

Test-management tools such as TestRail, Qmetry, and Zephyr streamline the process further by letting teams create test cases, manage test runs, and analyze results. Integrating these tools reduces manual work and improves collaboration. Each evaluation cycle becomes more consistent, and the team gets a structured way to meet its quality goals.

- insight7

Insight7 records, transcribes, and analyzes conversations at scale. Its customizable templates let a team define evaluation criteria once and check every transcript against them. This can make more frequent evaluations practical without a proportional increase in manual review.

Because each evaluation produces structured results, the team can compare cycles, see where performance is improving or slipping, and refine its processes accordingly. Used this way, the tool supports an iterative cadence in which each cycle informs the next and overall quality improves over time.

- Jira

Jira is widely used by QA teams to track defects, manage tasks, and coordinate work. Issues and their status are visible to everyone, so problems surface quickly and the team can adjust its process in response.

Jira can also streamline workflow and communication. QA teams can build custom dashboards to monitor task progress and deadline adherence. Its reports help assess the performance metrics that inform how often evaluations should run. Together these features support better-informed decisions and help keep the QA process responsive to changing needs.

- TestRail

TestRail supports a structured cadence by managing test cases, planning test cycles, and tracking results. It helps teams apply test cases consistently and log results accurately, which makes trends and improvement areas easier to identify.

To use TestRail effectively, integrate it with your existing workflow. Tailor test plans to project requirements and to lessons from earlier cycles, and share insights from test results across the team. A well-defined cadence shortens the feedback loop, which allows quicker adjustments and closer alignment with project objectives. Used consistently, TestRail helps the team sustain an evaluation rhythm that fits agile ways of working.

- Qmetry

Qmetry is another option for analytics-based QA teams. It helps teams manage and streamline their testing processes, and its analytics let them base decisions on current test data. This is especially valuable in complex projects, where ad hoc evaluation tends to fall short.

To get the most out of Qmetry, teams should focus on three practices. First, review testing strategies regularly to find inefficiencies. Second, set measurable goals tied to project objectives, so that evaluation is a proactive part of development rather than a reactive one. Third, keep communication open across teams so that insights turn quickly into improvements.

- Zephyr

Zephyr helps teams run evaluations on a regular, systematic schedule. It fits into existing workflows and provides test-case management, progress tracking, and reporting.

Zephyr organizes test execution into cycles that can be planned around whatever cadence suits the project. As teams run these cycles, they learn more about their testing process, and those insights feed continuous improvement. For teams building an analytics-driven QA practice, it is a practical option for keeping evaluations on schedule.

Conclusion: Embracing an Optimal QA Evaluation Cadence for Continuous Improvement

A well-chosen evaluation cadence is a foundation for continuous improvement in analytics-based QA teams. Matching evaluation frequency to project needs and team dynamics improves productivity and software quality. A consistent rhythm also produces actionable insights that help teams address challenges and adapt to new information.

A steady evaluation rhythm also builds a culture of feedback and learning in which issues are identified early. That proactive approach improves processes, strengthens team cohesion and performance, and keeps quality assurance central to the organization's success.
