The reliability of an artificial intelligence system depends on its validity. AI Validity Tools assess both internal and external factors that contribute to a system's accuracy and generalizability: internal validity concerns whether a model behaves correctly on the data and conditions it was built for, while external validity concerns whether its results hold beyond them. Understanding these tools helps stakeholders make informed decisions and confirm that an AI system meets the standards they require for trustworthiness and effectiveness. As AI is adopted across more sectors, validity becomes central to sustaining user trust and satisfaction.

These tools serve as the foundation for evaluating AI models, identifying potential biases, and providing insights into user experiences. A clear grasp of how these validity tools operate empowers organizations to enhance the quality of AI implementations. By delving into the essential functions of AI Validity Tools, we can explore their significance and impact on achieving reliable outcomes in technology-driven projects.

Assessing AI Validity: Internal Tools

Assessing AI validity through internal tools is crucial for ensuring that AI systems function effectively and produce reliable outcomes. These tools help organizations evaluate the performance and behavior of AI models within their unique operational framework. They examine various dimensions of internal validity, such as data integrity, algorithm reliability, and user interactions, ultimately contributing to the overall effectiveness of AI applications.

There are several key internal AI validity tools to consider. First, data auditing tools are essential for ensuring that the input data remains accurate and representative of the target population. Second, performance monitoring systems can track AI behavior over time, identifying any deviations or biases that may arise. Third, user feedback mechanisms provide insights into how end-users interact with AI systems, highlighting any areas for improvement. By integrating these internal tools, organizations can foster transparency and accountability in their AI processes, ultimately bolstering trust in the technology.
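The data auditing step described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `audit_representation`, the `tolerance` threshold, and the sample records are all hypothetical. It counts missing values in one field and compares the observed share of each category with the share expected in the target population.

```python
from collections import Counter


def audit_representation(records, field, target_shares, tolerance=0.05):
    """Compare observed category shares in `records` with expected shares.

    `target_shares` maps each category to its assumed share of the target
    population; `tolerance` is an illustrative threshold, not a standard.
    """
    values = [record.get(field) for record in records]
    missing = sum(value is None for value in values)
    present = [value for value in values if value is not None]
    counts = Counter(present)
    total = len(present)

    shares = {}
    for category, expected in target_shares.items():
        observed = counts.get(category, 0) / total if total else 0.0
        shares[category] = {
            "observed": observed,
            "expected": expected,
            "flagged": abs(observed - expected) > tolerance,
        }
    return {"missing": missing, "shares": shares}


# Hypothetical sample: one record has no region recorded.
records = [
    {"region": "north"}, {"region": "north"}, {"region": "north"},
    {"region": "south"}, {"region": None},
]
result = audit_representation(records, "region", {"north": 0.5, "south": 0.5})
print(result)
```

In this toy sample the "north" category is over-represented relative to the assumed 50/50 split, so both categories are flagged. A real audit would cover many fields and use thresholds chosen for the project.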

Key AI Validity Tools for Internal Assessment

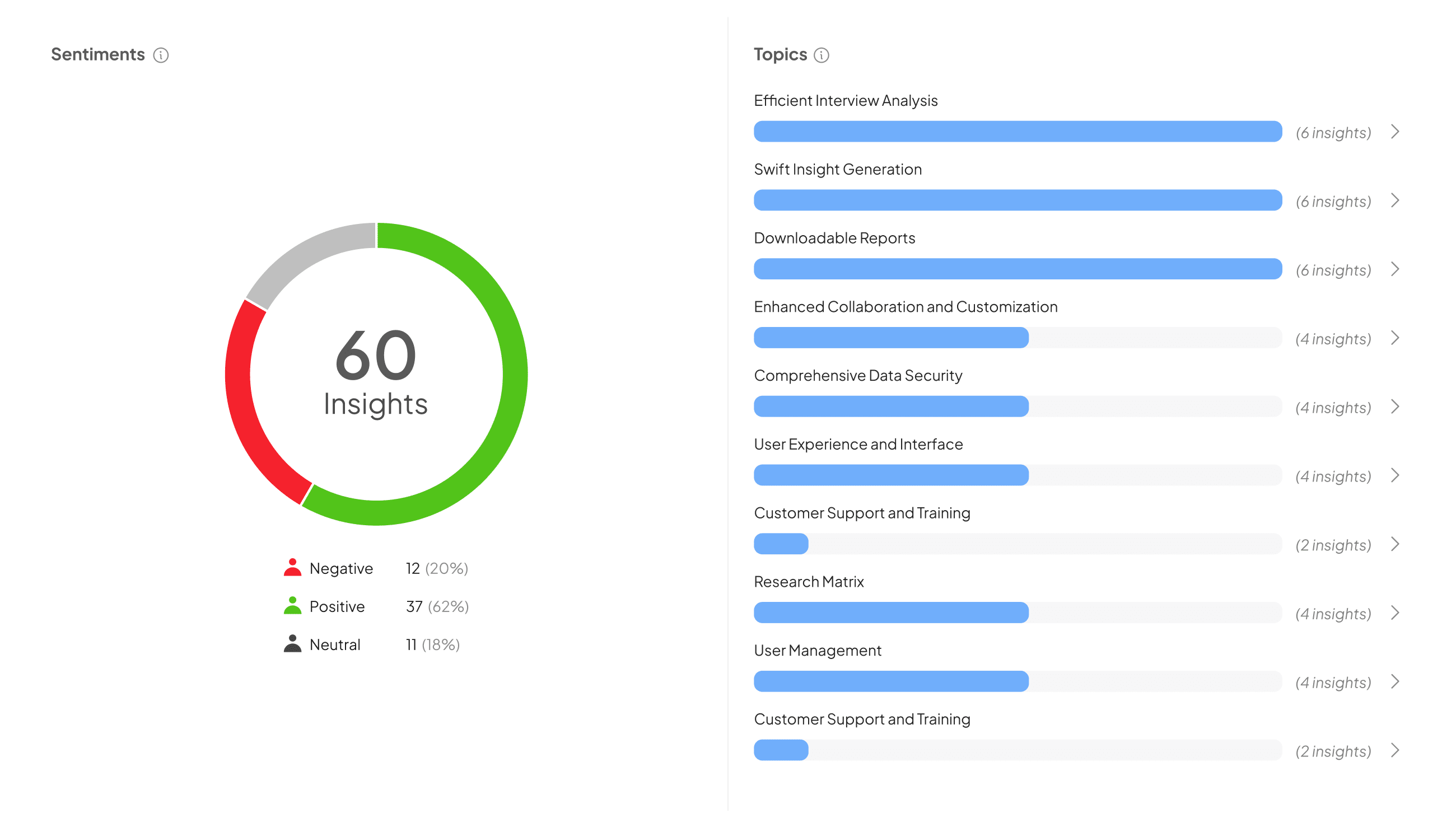

Internal assessments of AI models rely on a set of AI validity tools. These tools streamline the validation process, helping stakeholders gain insights into the performance and reliability of AI systems. One thing they do particularly well is identify biases within datasets. Uncovering bias helps teams refine their algorithms, leading to more accurate and fair outcomes.

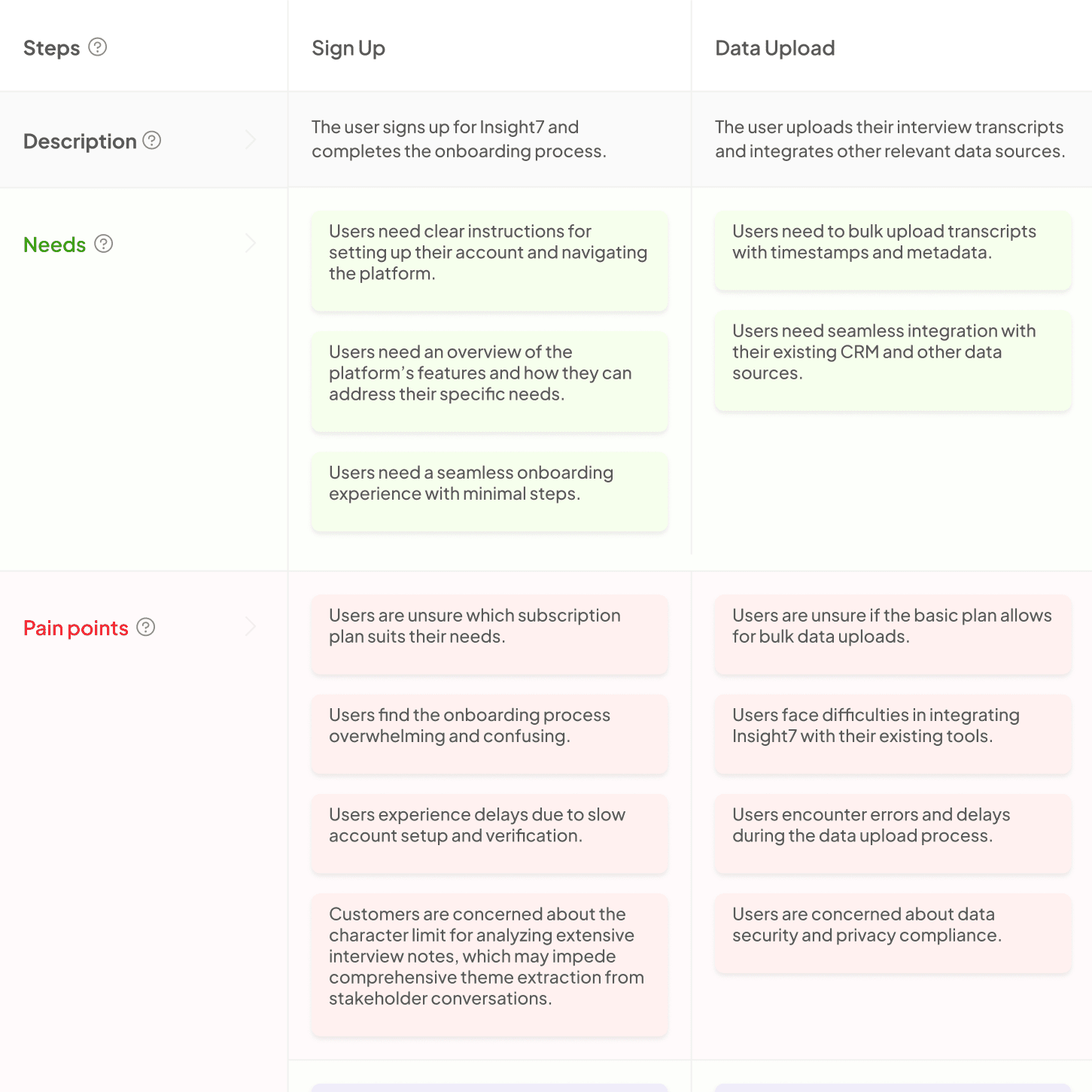

Several prominent tools offer comprehensive capabilities for internal assessments. First, research matrices allow users to input multiple queries, quickly generating relevant insights. These matrices can significantly reduce time spent on data analysis while enhancing clarity. Second, customizable dashboards facilitate tailored insights through various templates suited for specific projects, such as market research or employee engagement analysis. Finally, general query functionality enables users to probe datasets easily, generating valuable personas and insights on demand. By leveraging these AI validity tools, organizations can create robust internal assessments that enhance the overall effectiveness of their AI initiatives.

Methodologies and Best Practices

To assess the validity of AI tools effectively, employing sound methodologies and best practices is crucial. The consistency of results obtained through AI validity tools can significantly impact the overall performance and reliability of the solutions they support. One key methodology involves comprehensive testing across diverse datasets, which helps ensure that the findings are applicable to various scenarios. Additionally, it is important to conduct regular audits to refine the tools and address any discrepancies that may arise.
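Testing across diverse datasets can be illustrated with a short sketch. The toy threshold model, the dataset names, and the helper `evaluate_across_datasets` are assumptions made for illustration. The idea is to score the same model on several datasets and look at the spread between the best and worst result, since a large spread suggests the findings may not carry over to every scenario.

```python
def accuracy(predict, examples):
    """Share of (input, label) pairs the model predicts correctly."""
    correct = sum(predict(x) == y for x, y in examples)
    return correct / len(examples)


def evaluate_across_datasets(predict, datasets):
    """Return per-dataset accuracy and the gap between best and worst."""
    scores = {name: accuracy(predict, examples)
              for name, examples in datasets.items()}
    spread = max(scores.values()) - min(scores.values())
    return scores, spread


def predict(x):
    # Toy model for illustration: label is True when the input is 5 or more.
    return x >= 5


# Hypothetical datasets; the second follows a different labelling pattern.
datasets = {
    "dataset_a": [(1, False), (6, True), (9, True), (3, False)],
    "dataset_b": [(4, True), (5, True), (2, False), (7, False)],
}
scores, spread = evaluate_across_datasets(predict, datasets)
print(scores, spread)
```

Here the model is perfect on the first dataset and only half right on the second, which is the kind of discrepancy a regular audit would be expected to surface.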

Best practices also involve engaging multidisciplinary teams to evaluate AI outcomes. This collaboration enhances the tools' credibility and fosters a culture of continuous improvement. Training team members on data interpretation can improve the overall analysis process as well. By consistently following these methods and practices, organizations can confidently harness AI validity tools to support their objectives, ensuring both internal and external validity assessments are thoroughly executed.

Assessing AI Validity: External Tools

AI Validity Tools also help confirm that automated systems produce sound results outside the setting in which they were built. They support external evaluation by assessing a model's performance against diverse benchmarks. This process helps organizations identify discrepancies in their AI outputs so they can adjust and optimize the model promptly.

To fully benefit from AI Validity Tools, it helps to understand their components. First, performance metrics quantify accuracy, precision, and recall, offering insights into model behavior. Second, bias detection tools measure differences in outcomes among demographic groups, so that unfair disparities can be found and corrected. Third, transparent reporting mechanisms provide stakeholders with clear, understandable evaluations. By incorporating these elements, organizations can enhance the reliability of their AI implementations and refine their decision-making processes. This diligent approach fosters trust in AI systems and makes them more effective at addressing real-world challenges.
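The first two components can be made concrete with a small sketch for a binary classifier. The labels, predictions, and group assignments below are invented for illustration, and the bias check shown (the gap in positive-prediction rates between groups, often called a demographic parity difference) is only one of several fairness measures a team might choose.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def positive_rate_gap(y_pred, groups):
    """Positive-prediction rate per group and the largest gap between groups."""
    rates = {}
    for group in set(groups):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates[group] = sum(preds) / len(preds)
    return rates, max(rates.values()) - min(rates.values())


# Hypothetical labels, predictions, and group membership.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = classification_metrics(y_true, y_pred)
rates, gap = positive_rate_gap(y_pred, groups)
print(metrics, rates, gap)
```

In this example the overall metrics look identical (0.75 each), yet group "a" receives positive predictions three times as often as group "b". That is why aggregate metrics and group-level checks are reported side by side.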

Tools for Evaluating External AI Validity

Evaluating external AI validity requires tools that show whether results remain applicable and reliable outside the original setting. These AI validity tools help researchers and practitioners determine whether the outcomes of AI models generalize beyond the training dataset. One useful component is a research matrix, which organizes questions and answers in a structured format, allowing quick access to insights.

Another valuable tool is the dashboard visual experience, which includes various templates for different analytical projects. These templates enable users to define specific insights related to market research or employee engagement, thus streamlining the evaluation process. Finally, general question capabilities allow users to draw insights from vast datasets. By utilizing these tools, organizations can enhance their understanding of AI outcomes and make informed decisions based on accurate, externally valid data.

Navigating Challenges in External Validation

Navigating the challenges in external validation requires a strategic approach to ensure that AI validity tools are employed effectively. Firstly, one must recognize the differing contexts where AI solutions operate, as external validation often involves diverse datasets and varying user needs. These factors can complicate the assessment process. Moreover, understanding how external elements influence model performance is crucial. This influence can stem from changes in data characteristics or shifts in user behavior, making it essential to adapt validation strategies accordingly.
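One way to detect the changes in data characteristics mentioned above is to compare the distribution of a feature at validation time with its distribution in newer data. The sketch below uses the Population Stability Index (PSI) as an example measure; the function name `psi`, the equal-width binning, the bin count, and the small `eps` floor for empty bins are illustrative choices rather than a prescribed method.

```python
import math


def psi(reference, current, bins=4, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bin edges are equal-width over the range of `reference`; values in
    `current` that fall outside that range are counted in the edge bins.
    """
    low, high = min(reference), max(reference)
    width = (high - low) / bins or 1.0  # fall back if all values are equal

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm is always defined.
        return [max(count / len(values), eps) for count in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values: the newer sample is shifted upward by 40.
reference = list(range(100))
shifted = [value + 40 for value in reference]

print(psi(reference, reference))  # identical samples give 0.0
print(psi(reference, shifted))    # a clearly shifted sample gives a large value
```

A commonly cited rule of thumb treats a PSI above roughly 0.2 as a notable shift, but any threshold is an assumption that should be tuned to the application and revisited as user behavior changes.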

Secondly, establishing robust feedback mechanisms can ease these challenges. By integrating continuous feedback from users and stakeholders, organizations can more effectively monitor the relevance and accuracy of their AI tools. Additionally, ensuring transparency in the validation process helps to build trust and credibility in the outcomes of AI applications. Overall, addressing these challenges leads to more reliable external validation results, ultimately enhancing the performance and credibility of AI-powered systems.

Conclusion: The Importance of Balanced AI Validity Tools

Balanced AI validity tools are essential for robust and reliable assessments in artificial intelligence. They evaluate both internal and external validity, giving organizations a sound basis for trusting their AI outputs. A well-rounded approach identifies potential biases and also enhances the overall credibility of AI systems.

By understanding the intricacies of AI validity tools, stakeholders can mitigate risks and foster transparency. Prioritizing a balanced methodology ensures that AI applications are not only effective but also ethically sound. This commitment will ultimately shape the future of AI by instilling confidence and promoting responsible innovation.