This introduction to Dialpad QA Assessment in training AI scorecards looks at how quality assurance can transform AI training. Effective QA assessments are vital for identifying areas for improvement and ensuring a high standard of customer interactions. Dialpad QA Assessment plays a key role in enhancing AI scorecards, ultimately leading to better training outcomes for call center agents.

Incorporating Dialpad QA Assessment allows organizations to analyze performance data critically. By systematically evaluating interactions, teams can enhance communication strategies and refine training methodologies. This approach not only improves agent performance but also elevates customer satisfaction, making it essential for businesses striving for excellence in their customer service operations. A compassionate work environment remains crucial as well, because it cultivates a culture of growth and accountability.

Understanding the Role of Dialpad QA Assessment in AI Integrations

The Dialpad QA Assessment plays a critical role in enhancing the effectiveness of AI integrations. This assessment acts as a bridge between traditional quality assurance practices and the innovative demands of modern AI systems. By systematically evaluating interactions, the Dialpad QA Assessment helps identify areas where AI can be improved, ensuring it meets user expectations and performance standards.

Moreover, the insights gathered from the assessment inform the training of AI models, enabling them to adapt to real-world scenarios more effectively. With precise evaluations, teams can pinpoint weaknesses in communication and refine protocols accordingly. This process of continuous improvement is essential in today's rapidly evolving technological landscape, ensuring that AI systems remain relevant and effective in meeting user needs. In summary, the Dialpad QA Assessment is instrumental in aligning AI integrations with operational excellence and quality control standards.

Importance of Integrating Dialpad with AI QA Systems

Integrating Dialpad with AI QA systems is crucial for advancing training assessments and elevating overall performance metrics. Dialpad QA Assessment enhances the accuracy and efficiency of quality evaluations by utilizing advanced AI algorithms to analyze customer interactions. This integration transforms raw data into actionable insights that can significantly improve training scorecards and enhance agent performance.

Moreover, the synergy between Dialpad and AI systems fosters a more user-centric approach to quality assurance. By automating mundane QA tasks, organizations can focus on personalized coaching and support for their agents. This not only aids in maintaining high standards but also encourages agent accountability and engagement through more effective feedback loops. Ultimately, the collaboration between Dialpad and AI creates a pathway for heightened operational efficiency and better customer experiences, which are fundamental for thriving in a competitive environment.

How Dialpad QA Assessment Enhances AI Training Scorecards

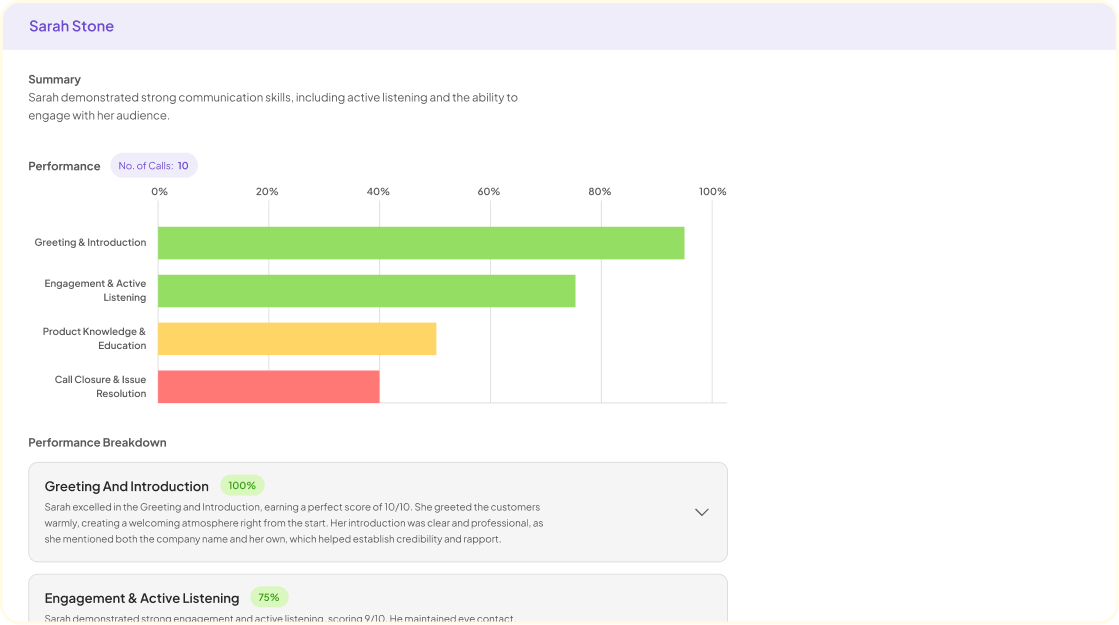

The Dialpad QA Assessment serves as a pivotal mechanism for enhancing AI training scorecards. By systematically evaluating performance metrics and qualitative feedback, this assessment identifies key areas for improvement in training processes. It helps teams understand whether time-consuming calls stem from complex customer inquiries or procedural inefficiencies. This clarity allows organizations to tailor their training programs more effectively, balancing performance with customer satisfaction.

Moreover, the insights gained from the Dialpad QA Assessment empower supervisors to provide constructive feedback. Instead of solely focusing on quantitative metrics like Average Handling Time (AHT), it encourages a holistic view that values agent experience and customer interaction quality. This approach fosters an environment where employees feel supported and encouraged to improve. Ultimately, the Dialpad QA Assessment facilitates a more nuanced understanding of agent performance, leading to enhanced overall service quality and better training outcomes.

Implementing Dialpad QA Assessment for Effective Training

Implementing Dialpad QA Assessment is crucial to developing effective training programs. By utilizing this framework, organizations can create a structured environment that fosters growth and improvement. Start by integrating Dialpad with AI tools to help collect and analyze performance data. This combination allows for a more nuanced understanding of agent behavior and customer interactions, significantly enhancing training effectiveness.

Next, configure AI scorecards to align with training objectives. This step ensures that assessments focus not only on efficiency but also on customer satisfaction and agent development. Measuring the impact of Dialpad QA Assessment is vital. Evaluate key performance indicators, such as customer feedback and call resolution rates, to understand the strengths and weaknesses of your training approach. By continually refining the assessment process, organizations can empower agents to provide exceptional service while maintaining a balanced focus on overall performance.

Steps to Integrate Dialpad with AI Tools

Integrating Dialpad with AI tools is essential for optimizing training assessment through QA scorecards. The first step involves setting up the Dialpad integration itself, ensuring seamless communication between the two systems. This includes verifying API access and confirming that all necessary permissions are in place. It’s also vital to sync your user data and call logs with the AI tools for accurate analysis.

Once integration is established, the next crucial step is configuring your AI scorecards. Tailor these scorecards to reflect key performance indicators, focusing on aspects like call resolution and customer satisfaction. This creates a balanced approach that emphasizes both efficiency and quality. By incorporating these elements, the Dialpad QA Assessment can effectively guide training initiatives, align performance metrics with customer expectations, and support a more holistic view of agent effectiveness. Adopting this structured process will ultimately enhance the customer experience and improve agent performance comprehensively.

Step 1: Setting Up the Dialpad Integration

To begin setting up the Dialpad integration, it’s crucial to understand the purpose behind this step. The integration seamlessly connects Dialpad with AI systems, enabling effective training assessments through QA scorecards. Start by accessing the Dialpad API documentation, which provides detailed guidelines for integration. Ensure that your team is familiar with the system's requirements, as this knowledge will facilitate a smoother setup process.

Next, create the necessary application credentials within your Dialpad account. These credentials will authorize the communication between Dialpad and your AI tools. After gathering this information, you can begin implementing the integration. Be sure to double-check each stage for accuracy, as any discrepancies may hinder performance. Successfully completing this setup is foundational to leveraging Dialpad QA assessments for enriching training evaluations. By creating a reliable connection with AI, you'll enhance your ability to generate insightful quality assessments, ultimately leading to improved training outcomes.
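The credential step above can be sketched in Python. This is a minimal illustration, not Dialpad's documented client: the environment variable name `DIALPAD_API_KEY` and the helper `build_auth_headers` are hypothetical, and the bearer-token scheme is an assumption to confirm against the Dialpad API documentation. The sketch only prepares request headers and makes no network call.

```python
import os
from typing import Mapping

# Hypothetical variable name; use whatever your secret store actually exposes.
API_KEY_ENV_VAR = "DIALPAD_API_KEY"


def build_auth_headers(env: Mapping[str, str] = os.environ) -> dict:
    """Return request headers built from a credential held in the environment.

    Assumes the API accepts a bearer token; confirm the scheme in the
    Dialpad API documentation before relying on it.
    """
    api_key = env.get(API_KEY_ENV_VAR, "").strip()
    if not api_key:
        # Fail early so a missing credential is caught during setup,
        # not in the middle of a data sync.
        raise RuntimeError(f"{API_KEY_ENV_VAR} is not set; create credentials first")
    return {
        "Authorization": f"Bearer {api_key}",
        "Accept": "application/json",
    }
```

Keeping the key in the environment rather than in source code makes it easier to rotate credentials and to double-check each stage of the setup without exposing secrets.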

Step 2: Configuring AI Scorecards for Optimal Output

To configure AI scorecards for optimal output, it's essential to ensure that they align closely with your parameters for Dialpad QA Assessment. Start by defining clear objectives for your scorecards, focusing on the key performance indicators (KPIs) that matter most for agent performance and customer satisfaction. This foundational step will guide the AI in analyzing interactions effectively, ensuring quality assessments reflect real-world performance metrics.

Next, utilize the integration features available in your selected AI tools. These features allow for the automated collection of data from Dialpad, which can enhance the scorecards' accuracy. Customize scoring criteria based on trends observed in agent interactions, tailoring the assessments to meet unique operational needs. Regularly review and adjust these settings to maintain relevance, ensuring that your AI scorecards continue to provide actionable insights and drive improvements in performance metrics.
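One way to picture a configurable scorecard is as a list of weighted criteria. The sketch below is illustrative only: the criterion names, the weights, and the `score_interaction` helper are assumptions made for this example, not settings from Dialpad or any specific AI tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float  # relative importance; weights need not sum to 1


# Illustrative criteria and weights only; replace with your own KPIs.
SCORECARD = [
    Criterion("issue_resolved", 0.4),
    Criterion("customer_satisfaction", 0.4),
    Criterion("handling_efficiency", 0.2),
]


def score_interaction(ratings: dict, scorecard=SCORECARD) -> float:
    """Combine per-criterion ratings (0.0 to 1.0) into a weighted 0-100 score."""
    total_weight = sum(c.weight for c in scorecard)
    if total_weight <= 0:
        raise ValueError("scorecard weights must sum to a positive number")
    weighted = 0.0
    for c in scorecard:
        rating = ratings[c.name]  # KeyError flags a criterion that was not rated
        if not 0.0 <= rating <= 1.0:
            raise ValueError(f"rating for {c.name} must be between 0 and 1")
        weighted += c.weight * rating
    return round(100 * weighted / total_weight, 1)
```

Because the weights live in one list, reviewing and adjusting the scorecard over time means editing data rather than scoring logic. Here efficiency carries half the weight of resolution or satisfaction, reflecting the balance described above.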

Evaluating the Impact: Measuring Success of Dialpad QA Assessments

Measuring the success of Dialpad QA Assessments involves a multi-faceted approach that draws on several key performance indicators. First, evaluate customer satisfaction scores, which indicate how well agents address customer needs. Compare these with agent retention rates to see whether a supportive environment correlates with higher performance. Additionally, consider the average handling time (AHT) relative to the complexity of calls, as this can reveal nuances that raw metrics overlook.

Next, analyze qualitative feedback from customers alongside quantitative results. This holistic evaluation helps identify strengths and areas needing improvement without penalizing agents for longer call durations when necessary. Finally, implement regular training and coaching sessions based on assessment outcomes, ensuring that agents feel motivated to excel while delivering outstanding service. By focusing on these specific areas, organizations can gauge the impact of Dialpad QA Assessments and continuously improve their customer service processes.
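To make "AHT relative to the complexity of calls" concrete, the sketch below compares each call with the average for its complexity tier rather than with a single global average. The call records, the tier labels, and the function names are hypothetical sample data for illustration, not output from Dialpad.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical call records: (agent, complexity tier, handle time in seconds).
calls = [
    ("ana", "simple", 240),
    ("ana", "complex", 900),
    ("ben", "simple", 300),
    ("ben", "complex", 840),
]


def aht_by_tier(records):
    """Average handle time per complexity tier, in seconds."""
    by_tier = defaultdict(list)
    for _agent, tier, seconds in records:
        by_tier[tier].append(seconds)
    return {tier: mean(times) for tier, times in by_tier.items()}


def relative_aht(records):
    """Each agent's mean ratio of handle time to the tier average.

    A value near 1.0 means the agent is typical for the calls they take,
    so long calls on complex issues do not count against them.
    """
    baseline = aht_by_tier(records)
    ratios = defaultdict(list)
    for agent, tier, seconds in records:
        ratios[agent].append(seconds / baseline[tier])
    return {agent: round(mean(r), 2) for agent, r in ratios.items()}


print(relative_aht(calls))  # {'ana': 0.96, 'ben': 1.04}
```

In this sample both agents sit close to 1.0, even though their complex calls run more than three times as long as their simple ones.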

Tools for Enhancing Dialpad QA Assessment Integration

To enhance Dialpad QA Assessment integration, various tools can be utilized to streamline the process and improve the quality of interactions. The integration of such tools supports a comprehensive approach to analyzing call data, enabling teams to identify trends and offer training where needed. This ensures that each assessment provides valuable insights, which can drive better customer satisfaction and agent performance.

One crucial tool in this context is Gong, which captures and analyzes conversations for performance metrics. Additionally, Chorus.ai offers similar capabilities, focusing on conversational intelligence to aid quality assessments. Jiminny is another strong option, providing real-time feedback on calls that can assist agents in improving their techniques. SalesLoft complements these tools by aiding in managing customer interactions more effectively. Together, these resources foster an environment of continuous improvement, making Dialpad QA Assessments more impactful and aligned with organizational objectives.

Insight7 and Its Role in Dialpad Integration

The incorporation of Insight7 plays a pivotal role in the effective integration of Dialpad QA Assessment within training frameworks. By utilizing Insight7’s capabilities, organizations can analyze interaction data, identify performance trends, and create intuitive scorecards. This vital process allows teams to streamline their training initiatives, ensuring each agent is equipped to handle customer interactions with utmost proficiency.

Furthermore, Insight7 enhances the Dialpad QA Assessment by providing actionable insights derived from performance data. These insights help in pinpointing areas needing improvement while celebrating agents' strengths. This feedback loop not only aids in continuous development but also contributes to higher job satisfaction and lower stress levels for agents. As a result, implementing such an integration supports the broader aim of delivering quality customer service effectively and efficiently.

Other Essential Tools for Effective Integration

To optimize the effectiveness of integration with Dialpad QA Assessment, several essential tools play a pivotal role. Utilizing platforms like Gong and Chorus.ai can enhance communication analytics, facilitating nuanced evaluations of interactions. These tools offer insights that help refine training methodologies and align with performance metrics.

In addition to interaction analytics, tools like Jiminny and SalesLoft are valuable for monitoring agent performance and fostering engagement. Jiminny not only records conversations but also provides intelligent feedback on calling techniques, which is crucial for continuous improvement. SalesLoft streamlines outreach efforts, allowing for tailored coaching based on real-time data and thereby improving overall training assessment effectiveness. By leveraging these tools, organizations can establish a holistic approach to enhancing the Dialpad QA Assessment, ultimately leading to improved agent autonomy and stronger performance metrics.

Tool 1: Gong

Gong is an instrumental tool in the Dialpad QA assessment process, significantly enhancing the way training and performance evaluations are conducted. By capturing and analyzing conversations in real time, it provides actionable insights that inform coaching strategies and customer interaction techniques. This capability helps representatives maintain a high quality of service while adhering to compliance standards.

Moreover, Gong helps identify key areas for improvement, allowing trainers to develop tailored coaching sessions that address specific deficiencies. These targeted sessions can significantly raise overall performance metrics, which are crucial for successful Dialpad QA assessments. By integrating Gong into the training framework, organizations can foster a culture of continuous learning and improvement, ultimately driving superior results. Through this powerful synergy, the value of effective communication and analysis is amplified, paving the way for excellence in customer engagement.

Tool 2: Chorus.ai

Chorus.ai plays a vital role in enhancing Dialpad QA Assessment by transforming call data into actionable insights. By analyzing conversations, it identifies patterns and areas of improvement, which can significantly influence training effectiveness. This tool utilizes advanced AI technology to deliver precise evaluations of agent performance, aligning perfectly with the requirements for effective quality assurance.

Using Chorus.ai simplifies the evaluation process required for Dialpad QA Assessment. It helps ensure compliance by highlighting key compliance factors that agents must mention during calls. As agents adjust their techniques based on these insights, overall call quality improves. Implementing Chorus.ai not only enriches the training experience but also fosters a deeper understanding of customer needs, ultimately leading to higher satisfaction levels. By embracing such tools, organizations can continually refine their approach to training and elevate their standard practices.
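The idea of checking that agents mention required compliance statements can be illustrated with a simple transcript scan. This is a generic sketch and does not represent how Chorus.ai works internally: the phrase list and the `missing_phrases` helper are hypothetical, and real tools are likely to use more robust matching than exact phrases.

```python
import re

# Hypothetical required statements; substitute your own compliance script.
REQUIRED_PHRASES = [
    "this call may be recorded",
    "is there anything else i can help",
]


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation and whitespace for loose matching."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def missing_phrases(transcript: str, required=REQUIRED_PHRASES) -> list:
    """Return the required phrases that do not appear in the transcript."""
    haystack = normalize(transcript)
    return [p for p in required if normalize(p) not in haystack]
```

For example, `missing_phrases("Hi! This call may be recorded. How can I help?")` returns the closing question as the one missing item, which a reviewer could then raise in coaching.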

Tool 3: Jiminny

Jiminny plays a vital role in enhancing Dialpad QA Assessment. This tool stands out because it allows teams to analyze conversations in detail, leading to insightful performance evaluations. By capturing nuances in communication, Jiminny empowers agents and managers to focus on key areas for improvement. This targeted analysis contributes significantly to creating effective training strategies.

The intuitive interface of Jiminny simplifies the process of reviewing and scoring call interactions. Agents receive personalized feedback based on actual performance, ensuring their growth is continuous and tailored. Additionally, this tool aligns well with the autonomy granted to agents, enabling them to reflect on past interactions without needing managerial approval. As a result, the integration of Jiminny within the Dialpad QA Assessment framework helps in fostering an environment of trust and self-improvement, ultimately leading to improved performance metrics and higher job satisfaction.

Tool 4: SalesLoft

SalesLoft stands out as a crucial tool when evaluating the effectiveness of Dialpad QA assessments. This platform excels in integrating directly with various communication tools, including Dialpad, allowing sales teams to refine their performance through insightful data. Sales representatives can access scorecard evaluations seamlessly, which aids in recognizing strengths and areas for improvement based on real customer interactions.

Moreover, SalesLoft enhances agent training by offering personalized feedback tied to actual call data from Dialpad. This symbiotic relationship not only promotes agent empowerment but also maximizes quality assurance processes. As agents enjoy greater autonomy and improved job satisfaction, they become more effective in their roles, leading to optimal performance outcomes. By analyzing this data, organizations can witness tangible improvements in average handle time (AHT) and overall team efficiency, confirming the critical nature of integrating SalesLoft with Dialpad QA assessments.

Conclusion: Leveraging Dialpad QA Assessment for Superior AI Training Outcomes

Dialpad QA Assessment serves as a pivotal element in refining AI training outcomes, ensuring that assessments align with predefined metrics. By utilizing this structured approach, organizations can focus on both efficiency and customer satisfaction. This balance is essential for reducing long average handling times while recognizing that agent performance is about more than mere speed. As companies embrace this model, they recognize the impact of supportive environments that foster agent growth and better customer interactions.

Furthermore, the integration of Dialpad QA Assessment enhances the overall training framework. It equips teams with the tools necessary to provide tailored feedback, nurturing a culture of continuous improvement. This strategic mindset enables agents to handle calls with confidence, resulting in higher satisfaction levels for both employees and customers alike. Prioritizing the right metrics will ultimately lead to superior performance outcomes, further solidifying the relevance of Dialpad QA Assessment in modern AI training.
